If you have been following the Lakehouse movement over the last few years, something crystal clear has been made - if you are on Databricks, deltalake is the way to go; for everyone else, use iceberg. Both of these platforms have their pros and cons (mostly pros). On a cons list though, setting up a local spark instance on either of these platforms to communicate with a cloud object store always requires a lot of hoops to jump through. If you don’t believe me, this is the laundry list of spark configs required just to get Apache Iceberg to talk to GCS using application auth creds:

I had written a prior substack article on this and ranted about how unobvious it was to find the correct jars to get it working.

Anyways, for a while, I had come to accept that having to whack my head against a desk repeatedly and find the right kind of voodoo for spark to talk to the cloud gods was just a rite of passage. Then, in the final week of May (Circa 2025), that all changed. The gods of duckdb bestowed upon us a different take of the Lakehouse where they addressed several of the shortcomings of the Iceberg camp, most notably the massive headache that is the Iceberg catalog. Enter ducklake.

What is Ducklake?

Ducklake is Duckdb’s solution to enable a Lakehouse via duckdb; simply put, manage your metadata through a duckdb database (or Postgres if you want), and then store the data elsewhere (more than likely a cloud object store such as S3 or GCS). And as one would expect with the simplicity that duckdb is known for, ducklake is as easy as it gets to implement. Below is all it takes to get ducklake up and running to read/write your data files to GCS:

Key things here:

No special jars needed

no java runtime environment needed

no guessing the dozen or so spark configs, hoping things just work out

Alright, Let’s Create and Update A Table

There are no special secrets here; just straight sql to create and update the table, and it’s a breeze:

And how do we query it? Easy:

Time To Kick It Up a Notch

A big headache that I run into often is some data being on-prem and some being in cloud. How does one join all that stuff? It usually involves the following steps:

setup a replication pipeline to copy the on-prem data to the object store in the cloud

transform the landed data in the object store to a consumable format

join it to the cloud data via spark or some other engine

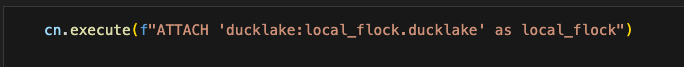

But given we just saw with Ducklake that I can easily attach a ducklake with a single ATTACH statement, why not go ahead and simulate attaching an on-prem/local copy of some data and see if we can just join it all?

Alright, we were able to create/attach a new lakehouse that is local. Let’s create a table that has some SPI data in it, which is typically what one would keep on-prem and not put in the public cloud:

Great, that worked as well. Now time for the coup de grace…

WOW - We just joined a cloud lakehouse table to an on-prem lakehouse table, and again with Duckdb and ducklake, this was absolutely as easy as it gets…you’re welcome 😆.

Side Note - That was my poor attempt in chatGPT to take a super saiyan fusion and change it to 2 ducks fusing…good enough for today 🤡.

Where Do We Go From Here?

With this tutorial, one can get up and running with a lakehouse or lakehouses in a matter of a few minutes - that to me is a major game changer and what I’ve always loved above duckdb. Simply put, the speed to get things going in duckdb is unmatched. And the overhead is very low.

I know the spark evangelists out there will say things like “But but, can duckdb churn through petabytes of data?”

No, and duckdb has never wanted to be a platform like that

In this world of “big data”, if you are smart and don’t want to break the bank, you will structure your queries as best as possible to target specific partitions when you need to work with large tables vs. charging your org $5k for a single query because you simply had to query the entire history of a dataset that no one asked for 😁.

Additionally, the simple illustration of joining tables across lakehouses really starts to open a new frontier. Imagine if you have some data sitting in S3, GCS, and locally. With ducklake, you are less than a dozen lines of code from connecting the 3.

At this point, the only elephant in the room for duckdb and this new ducklake is governance, especially if one wants to consider it enterprise grade. In order for large scale orgs to massively adopt this platform, we will need a way to easily secure tables and columns for users. Hopefully, duckdb labs has something like that in their backlog ready to cook soon.

I’m curious to see where others take this, but for me, if I can avoid spark and still be able to put my data up in the cloud, i’m sold.

Thanks for Reading,

Matt

It will indeed be interesting to see how the data community reacts to DuckLake and what is coming in the future, knowing DuckDB, I'm sure there will be more coming.

Absolutely! For easily ingesting data into Ducklake, check out sling at https://docs.slingdata.io, there is a CLI as well as a Python interface.